In modern TCP, window scaling is easy to overlook until it is missing. The window is the number of bytes that the receiving TCP is prepared to receive. RFC 793 defines the window field as 16 bits, so the receiving TCP can advertise that it is willing and able to receive at most 65,535 bytes. 65,535 outstanding bytes was probably more than sufficient for the systems of 1981, when TCP was standardized, but it is severely insufficient for today’s throughput requirements.

TCP window scaling was standardized in May 1992 in RFC 1323. It defines a TCP option that lets each side multiply its advertised window by a power of two, which increases TCP performance. If you wish to know the specifics of window scaling, I recommend reading the RFC. One important factor is that for window scaling to be used, both the sending and receiving TCP must indicate that they support it.
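To make the multiplication concrete, here is a minimal sketch of how a 16-bit window field and a negotiated shift count combine into the real window. The function name `effective_window` is my own for illustration; the limit of 14 on the shift count comes from the RFC, and the sketch simply rejects anything larger.

```python
MAX_SHIFT = 14  # largest shift count the window scale option allows


def effective_window(window_field: int, shift: int) -> int:
    """Return the real window in bytes for a 16-bit header value and a shift count."""
    if not 0 <= window_field <= 0xFFFF:
        raise ValueError("window field must fit in 16 bits")
    if not 0 <= shift <= MAX_SHIFT:
        raise ValueError("shift count must be between 0 and 14")
    # Scaling is a left shift, i.e. multiplication by 2**shift.
    return window_field << shift


if __name__ == "__main__":
    for shift in (0, 7, 14):
        print(shift, effective_window(0xFFFF, shift))
    # 0 65535
    # 7 8388480
    # 14 1073725440
```

With a shift count of 0 the window is capped at the familiar 65,535 bytes, while the maximum shift of 14 allows a window of roughly 1 GB.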

I reused the home lab setup from a previous article, where I compared the performance of CUBIC and BBR TCPs with iperf. The source, transit node, and destination are Raspberry Pis connected via 1 Gbps Ethernet and a switch. I used the Linux Traffic Control feature to configure various levels of network delay in the path between the sender and receiver. Static routes were configured on the source and destination for reachability to each other.

To get started, I took a baseline iperf test between the Pis with no additional network delay injected. The average round trip time (RTT) was 0.378 ms and the average throughput was 912 Mbps. A SPAN session was enabled on the switch with the output fed into Wireshark on my laptop.

Confirmation that TCP window scaling is enabled:

pi@routingloopi:/etc $ sysctl net.ipv4.tcp_window_scaling

net.ipv4.tcp_window_scaling = 1

Validation of RTT:

pi@routerberrypi:/etc $ ping 10.0.0.5 -c 10

PING 10.0.0.5 (10.0.0.5) 56(84) bytes of data.

64 bytes from 10.0.0.5: icmp_seq=1 ttl=63 time=0.440 ms

64 bytes from 10.0.0.5: icmp_seq=2 ttl=63 time=0.358 ms

64 bytes from 10.0.0.5: icmp_seq=3 ttl=63 time=0.373 ms

64 bytes from 10.0.0.5: icmp_seq=4 ttl=63 time=0.365 ms

64 bytes from 10.0.0.5: icmp_seq=5 ttl=63 time=0.364 ms

64 bytes from 10.0.0.5: icmp_seq=6 ttl=63 time=0.363 ms

64 bytes from 10.0.0.5: icmp_seq=7 ttl=63 time=0.377 ms

64 bytes from 10.0.0.5: icmp_seq=8 ttl=63 time=0.384 ms

64 bytes from 10.0.0.5: icmp_seq=9 ttl=63 time=0.381 ms

64 bytes from 10.0.0.5: icmp_seq=10 ttl=63 time=0.379 ms

--- 10.0.0.5 ping statistics ---

10 packets transmitted, 10 received, 0% packet loss, time 381ms

rtt min/avg/max/mdev = 0.358/0.378/0.440/0.028 ms

iperf results:

Accepted connection from 10.0.0.1, port 47948

[ 5] local 10.0.0.5 port 5201 connected to 10.0.0.1 port 47950

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 107 MBytes 897 Mbits/sec

[ 5] 1.00-2.00 sec 109 MBytes 914 Mbits/sec

[ 5] 2.00-3.00 sec 109 MBytes 914 Mbits/sec

[ 5] 3.00-4.00 sec 109 MBytes 914 Mbits/sec

[ 5] 4.00-5.00 sec 109 MBytes 914 Mbits/sec

[ 5] 5.00-6.00 sec 109 MBytes 914 Mbits/sec

[ 5] 6.00-7.00 sec 109 MBytes 913 Mbits/sec

[ 5] 7.00-8.00 sec 109 MBytes 914 Mbits/sec

[ 5] 8.00-9.00 sec 109 MBytes 914 Mbits/sec

[ 5] 9.00-10.00 sec 109 MBytes 914 Mbits/sec

[ 5] 10.00-10.00 sec 184 KBytes 902 Mbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.00 sec 1.06 GBytes 912 Mbits/sec receiver

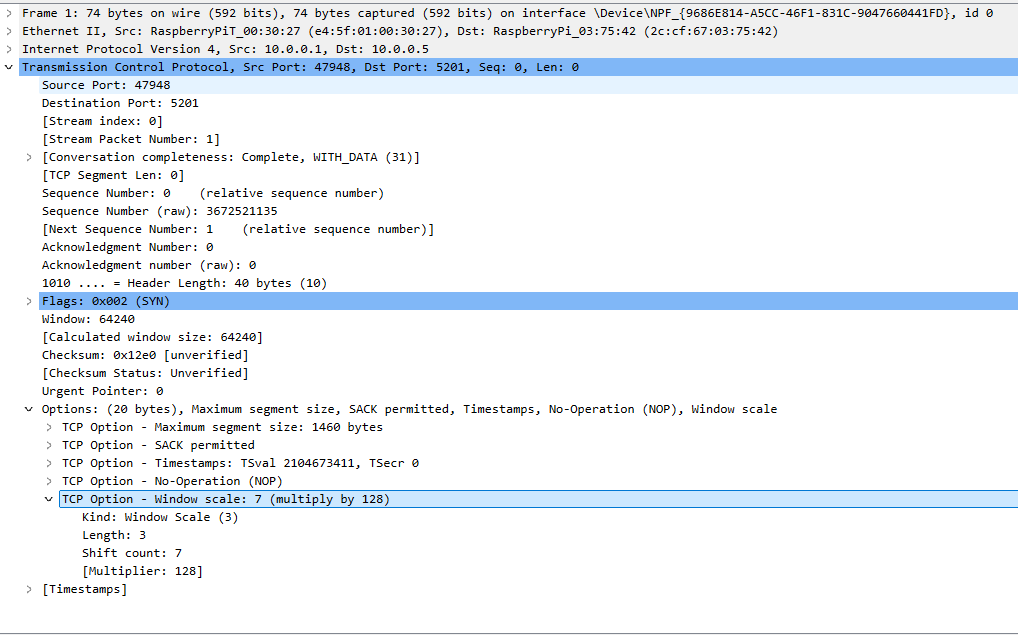

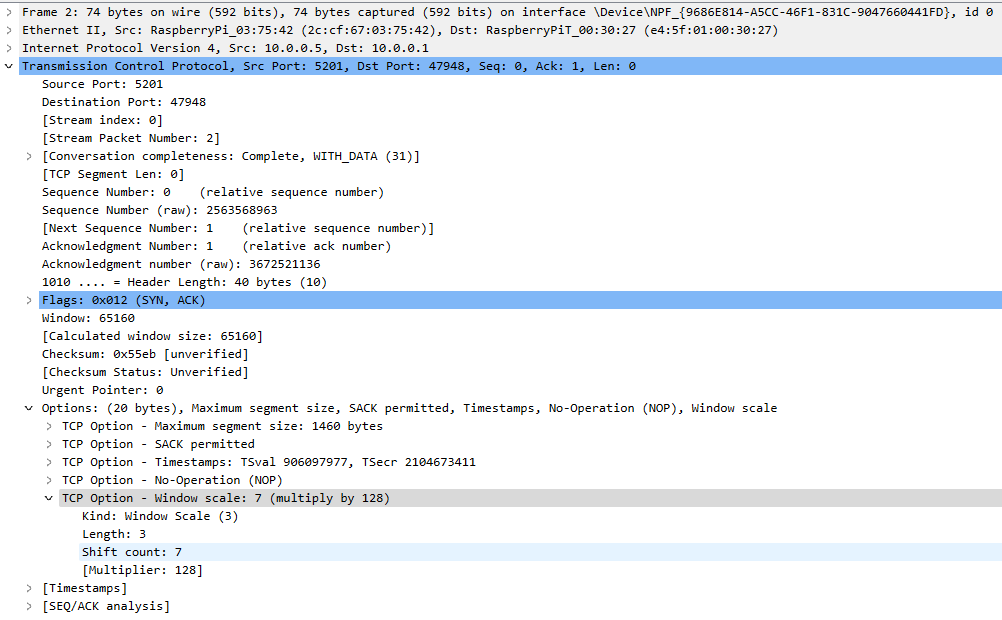

The packet capture snippets below confirm that the source and destination are using TCP window scaling. Note that the window scale multiplier is only sent in SYN packets, so it is only exchanged during the handshake.

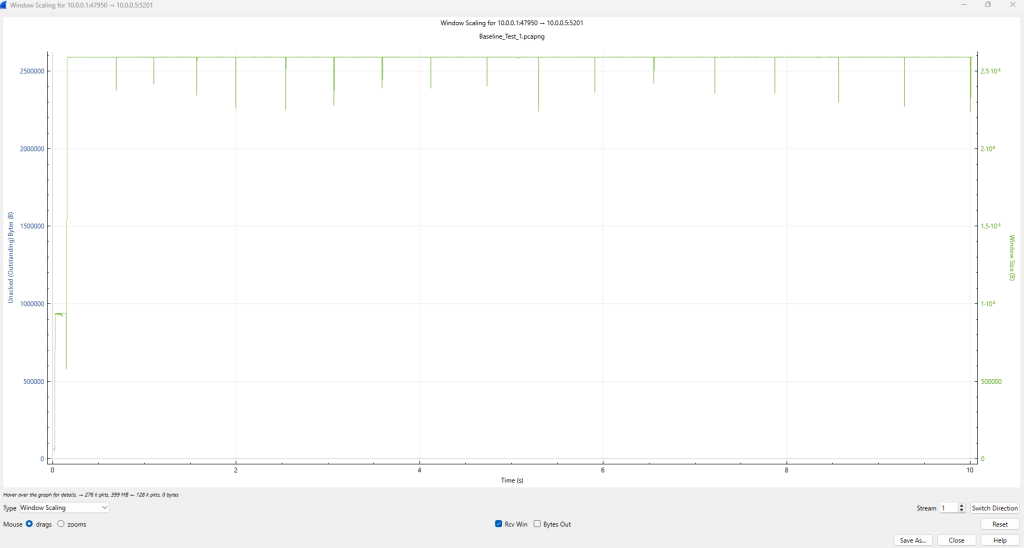

The scaled receive window in this test exceeded 2.5 × 10^6 bytes.
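For readers who would rather pull the shift count out of a capture programmatically than read it off Wireshark, the sketch below walks the options area of a TCP header and returns the window scale value if present. The option kinds used (0 = end of list, 1 = no-op, 3 = window scale with length 3) are the standard ones; the function name and the sample bytes are hypothetical and are not taken from my capture.

```python
from typing import Optional


def find_window_scale(options: bytes) -> Optional[int]:
    """Return the window scale shift count from raw TCP options, or None if absent."""
    i = 0
    while i < len(options):
        kind = options[i]
        if kind == 0:  # End of Option List
            break
        if kind == 1:  # No-Operation: a single padding byte with no length field
            i += 1
            continue
        if i + 1 >= len(options):
            break  # truncated option, stop parsing
        length = options[i + 1]
        if length < 2 or i + length > len(options):
            break  # malformed length, stop parsing
        if kind == 3 and length == 3:  # Window Scale option
            return options[i + 2]
        i += length
    return None


if __name__ == "__main__":
    # Hypothetical SYN options: MSS 1460, SACK permitted, timestamps, NOP, window scale 7.
    syn_options = bytes.fromhex("020405b4" "0402" "080a0000000000000000" "01" "030307")
    print(find_window_scale(syn_options))                # 7
    print(find_window_scale(bytes.fromhex("020405b4")))  # None
```

A result of `None` on a SYN or SYN/ACK means that side did not offer window scaling, so the connection is limited to the unscaled 16-bit window.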

For the next test, I disabled window scaling and kept the standard RTT.

pi@routerberrypi:/etc $ sudo sysctl -w net.ipv4.tcp_window_scaling=0

net.ipv4.tcp_window_scaling = 0

pi@routerberrypi:/etc $ sysctl net.ipv4.tcp_window_scaling

net.ipv4.tcp_window_scaling = 0

Ping to validate the RTT:

pi@routerberrypi:/etc $ ping 10.0.0.5 -c 10

PING 10.0.0.5 (10.0.0.5) 56(84) bytes of data.

64 bytes from 10.0.0.5: icmp_seq=1 ttl=63 time=0.414 ms

~ lines omitted for brevity ~

--- 10.0.0.5 ping statistics ---

10 packets transmitted, 10 received, 0% packet loss, time 398ms

rtt min/avg/max/mdev = 0.346/0.388/0.478/0.046 ms

iperf results:

pi@routingloopi:/etc $ iperf3 -s

-----------------------------------------------------------

Server listening on 5201

-----------------------------------------------------------

Accepted connection from 10.0.0.1, port 47956

[ 5] local 10.0.0.5 port 5201 connected to 10.0.0.1 port 47958

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 89.6 MBytes 752 Mbits/sec

[ 5] 1.00-2.00 sec 94.1 MBytes 789 Mbits/sec

[ 5] 2.00-3.00 sec 94.0 MBytes 789 Mbits/sec

[ 5] 3.00-4.00 sec 93.9 MBytes 787 Mbits/sec

[ 5] 4.00-5.00 sec 94.0 MBytes 789 Mbits/sec

[ 5] 5.00-6.00 sec 93.8 MBytes 787 Mbits/sec

[ 5] 6.00-7.00 sec 93.5 MBytes 784 Mbits/sec

[ 5] 7.00-8.00 sec 94.3 MBytes 791 Mbits/sec

[ 5] 8.00-9.00 sec 93.6 MBytes 785 Mbits/sec

[ 5] 9.00-10.00 sec 94.2 MBytes 791 Mbits/sec

[ 5] 10.00-10.00 sec 127 KBytes 775 Mbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.00 sec 935 MBytes 784 Mbits/sec receiver

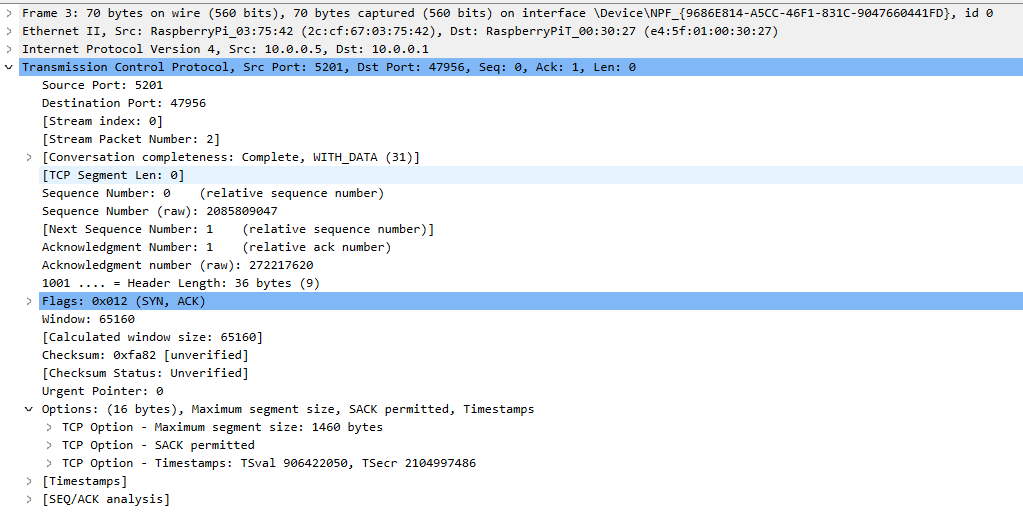

The packet capture snippets below confirm that the TCP window scale option is not transmitted. Note that TCP window scaling was only disabled on the source host, but because both sides must support the option for it to be used, the destination did not set the option in the SYN/ACK either.

Without scaling, we can see the receive window stuck at about 65k.

By disabling TCP window scaling, we see a drop from 912 Mbps to 784 Mbps. That is approximately a 14 percent reduction in throughput. 784 Mbps still sounds pretty good, and in many circumstances it is. The alarming results come in the next several tests, when additional delay is injected into the network.

For the next test, I kept TCP window scaling disabled and configured an additional 25 milliseconds of delay on the transit node Raspberry Pi using the command shown below.

tc qdisc add dev eth0.10 root netem delay 25ms

Just like before, I ran some pings to validate latency and then initiated an iperf test.

pi@routerberrypi:/etc $ ping 10.0.0.5 -c 10

PING 10.0.0.5 (10.0.0.5) 56(84) bytes of data.

64 bytes from 10.0.0.5: icmp_seq=1 ttl=63 time=25.5 ms

~ lines omitted for brevity ~

--- 10.0.0.5 ping statistics ---

10 packets transmitted, 10 received, 0% packet loss, time 23ms

rtt min/avg/max/mdev = 25.366/25.392/25.498/0.204 ms

iperf results:

[ 5] local 10.0.0.5 port 5201 connected to 10.0.0.1 port 47962

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 2.18 MBytes 18.3 Mbits/sec

[ 5] 1.00-2.00 sec 2.39 MBytes 20.1 Mbits/sec

[ 5] 2.00-3.00 sec 2.38 MBytes 20.0 Mbits/sec

[ 5] 3.00-4.00 sec 2.38 MBytes 20.0 Mbits/sec

[ 5] 4.00-5.00 sec 2.37 MBytes 19.9 Mbits/sec

[ 5] 5.00-6.00 sec 2.39 MBytes 20.0 Mbits/sec

[ 5] 6.00-7.00 sec 2.38 MBytes 20.0 Mbits/sec

[ 5] 7.00-8.00 sec 2.38 MBytes 20.0 Mbits/sec

[ 5] 8.00-9.00 sec 2.39 MBytes 20.0 Mbits/sec

[ 5] 9.00-10.00 sec 2.38 MBytes 19.9 Mbits/sec

[ 5] 10.00-10.03 sec 62.2 KBytes 20.1 Mbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.03 sec 23.7 MBytes 19.8 Mbits/sec receiver

Adding 25 ms delay dropped throughput all the way to 19.8 Mbps! This result was shocking enough that I ran the test again but had similar results. TCP window scaling makes a huge difference over WAN links!
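The number is less surprising once you do the arithmetic: a sender can have at most one window of unacknowledged data in flight per round trip, so throughput is bounded by window size divided by RTT. The sketch below is a back-of-the-envelope check rather than a model of the full TCP stack; the function name is my own, and it plugs in the unscaled 65,535-byte window and the 25.392 ms average RTT from the ping above.

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on throughput when one window can be in flight per round trip."""
    bits_per_round_trip = window_bytes * 8
    rtt_seconds = rtt_ms / 1000
    return bits_per_round_trip / rtt_seconds / 1e6


if __name__ == "__main__":
    ceiling = max_throughput_mbps(65535, 25.392)
    print(f"{ceiling:.1f} Mbits/sec")  # 20.6 Mbits/sec
```

The ceiling of about 20.6 Mbps lines up well with the 19.8 Mbps that iperf measured.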

To demonstrate the value of TCP window scaling over high-delay paths, I re-enabled it and kept the 25 ms of delay.

pi@routerberrypi:/etc $ sudo sysctl -w net.ipv4.tcp_window_scaling=1

net.ipv4.tcp_window_scaling = 1

pi@routerberrypi:/etc $ ping 10.0.0.5 -c 10

PING 10.0.0.5 (10.0.0.5) 56(84) bytes of data.

64 bytes from 10.0.0.5: icmp_seq=1 ttl=63 time=25.5 ms

~ lines omitted for brevity ~

--- 10.0.0.5 ping statistics ---

10 packets transmitted, 10 received, 0% packet loss, time 23ms

rtt min/avg/max/mdev = 25.359/25.396/25.478/0.178 ms

iperf results:

Accepted connection from 10.0.0.1, port 47964

[ 5] local 10.0.0.5 port 5201 connected to 10.0.0.1 port 47966

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 71.3 MBytes 598 Mbits/sec

[ 5] 1.00-2.01 sec 63.7 MBytes 530 Mbits/sec

[ 5] 2.01-3.00 sec 80.0 MBytes 676 Mbits/sec

[ 5] 3.00-4.00 sec 92.1 MBytes 772 Mbits/sec

[ 5] 4.00-5.00 sec 94.1 MBytes 789 Mbits/sec

[ 5] 5.00-6.00 sec 94.7 MBytes 794 Mbits/sec

[ 5] 6.00-7.00 sec 94.7 MBytes 795 Mbits/sec

[ 5] 7.00-8.00 sec 94.7 MBytes 794 Mbits/sec

[ 5] 8.00-9.00 sec 94.7 MBytes 794 Mbits/sec

[ 5] 9.00-10.00 sec 94.7 MBytes 794 Mbits/sec

[ 5] 10.00-10.03 sec 2.42 MBytes 793 Mbits/sec

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.03 sec 877 MBytes 734 Mbits/sec receiver

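Simply enabling the TCP window scaling option increased throughput from 19.8 Mbps to 734 Mbps over the 25.4 ms path! That is roughly 37 times the throughput. Put another way, running without window scaling gave up about 97.3 percent of the achievable throughput.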
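To see why scaling matters so much here, it helps to estimate the bandwidth-delay product: the number of bytes that must be in flight to keep the link full. The sketch below assumes a 1 Gbps link and the 25.396 ms average RTT from the ping above, then finds the smallest shift count whose maximum window covers that amount. The function names are my own, and this is plain arithmetic, not a description of how Linux actually chooses its scale factor.

```python
def bdp_bytes(bandwidth_bps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to keep the path full."""
    return bandwidth_bps * (rtt_ms / 1000) / 8


def min_shift(window_bytes: float) -> int:
    """Smallest shift count (0 to 14) whose maximum window covers window_bytes."""
    shift = 0
    while (0xFFFF << shift) < window_bytes and shift < 14:
        shift += 1
    return shift


if __name__ == "__main__":
    needed = bdp_bytes(1e9, 25.396)
    print(f"{needed / 1e6:.2f} MB in flight, shift count {min_shift(needed)}")
    # 3.17 MB in flight, shift count 6
```

Roughly 3.17 MB needs to be in flight to fill this path, close to 50 times what an unscaled 65,535-byte window can advertise.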

I continued to experiment with various levels of delay, with and without TCP window scaling enabled. Increasing the delay between two TCPs tends to degrade throughput, and this was no exception. Without window scaling, throughput stayed about 97 percent lower than with it until I went extreme with 1000 ms of latency. Below is a screenshot of the results for the remaining tests.

Modern end hosts should have TCP window scaling enabled by default. What might catch you off guard is the default behavior of some proxies, load balancers, etc. Before enabling TCP window scaling in your production environment, be sure to consider the additional memory overhead of larger TCP receive buffers.

Thanks for stopping by!